What is Scalability?

Definition

Defining scalability in system design can be confusing. Does it mean handling more or less work (scaling up and down), adding more power such as CPU or memory to a machine (vertical scaling), or adding more machines to a system (horizontal scaling)?

A simple definition of scalability is as follows:

A system is scalable when the cost of adding extra work stays approximately constant.

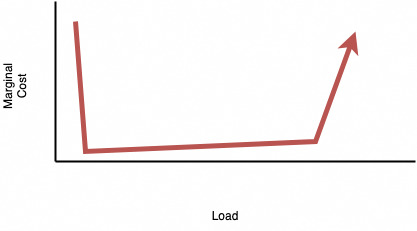
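To make the definition concrete, here is a minimal Python sketch that checks whether the cost of each extra step of work stays roughly constant. The function names, the equally spaced load steps, and the 25% tolerance are all assumptions made for illustration, not a standard test for scalability.

```python
import math


def marginal_costs(total_cost, loads):
    """Cost added by each step from one load level to the next.

    `total_cost` is any function mapping an amount of work to a total cost;
    `loads` is assumed to be an increasing, equally spaced sequence.
    """
    costs = [total_cost(n) for n in loads]
    return [after - before for before, after in zip(costs, costs[1:])]


def is_roughly_scalable(total_cost, loads, tolerance=0.25):
    """True if every marginal cost is within `tolerance` of the mean.

    The 25% default is an arbitrary illustrative threshold.
    """
    margins = marginal_costs(total_cost, loads)
    mean = sum(margins) / len(margins)
    return all(abs(m - mean) <= tolerance * abs(mean) for m in margins)


loads = range(0, 6000, 1000)

# Hypothetical pay-per-unit pricing: every unit of work costs the same.
per_unit = lambda n: 2 * n

# Hypothetical step pricing: a new 100-unit purchase every 2000 units of work.
stepwise = lambda n: 100 * math.ceil(n / 2000)

print(is_roughly_scalable(per_unit, loads))
print(is_roughly_scalable(stepwise, loads))
```

With these made-up cost functions, the per-unit model passes the check because every step adds the same cost, while the step model fails because some steps add nothing and others add a whole new purchase.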

Single-Machine System Example

Let’s say you have developed a very useful application and want to share it with the world. You would see an initial spike in marginal cost when buying the hardware and starting the instance, as the following diagram shows.

After that, the cost of running the application is near zero, because you have no further expenses for running it. But when the hardware running the application reaches its limits, for example because you have more users, you have to rethink the architecture of the system. That is the point where careful up-front thinking about the software really shows its value.

Being unable to scale your business because you first need to rethink the architecture is not a good position to be in. You have to think thoroughly about the system and its needs before reaching that spike. Overthinking the system's scalability needs leads to overspending on things you will probably never need, while underestimating them leads to a roadblock at some point.

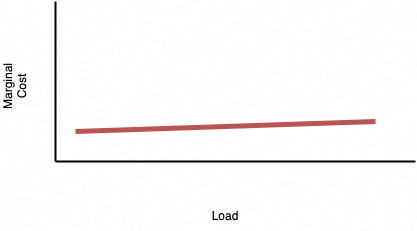
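The single-machine cost curve can be sketched as a simple step function. This is only a rough model under stated assumptions: the machine price, the capacity, and the one-off re-architecture cost are hypothetical numbers, and real systems rarely hit their limit at one exact user count.

```python
import math


def single_machine_total_cost(users, machine_price=1000, capacity=10_000,
                              rearchitecture_cost=5000):
    """Total cost of serving `users` on a single-machine setup.

    Assumptions (all hypothetical): the hardware is bought up front, running
    it costs nothing extra, and every time the user count outgrows a multiple
    of `capacity` a one-off `rearchitecture_cost` has to be paid.
    """
    times_outgrown = max(0, math.ceil(users / capacity) - 1)
    return machine_price + times_outgrown * rearchitecture_cost


def marginal_cost(users, **kwargs):
    """Cost of going from `users` to `users + 1`."""
    return (single_machine_total_cost(users + 1, **kwargs)
            - single_machine_total_cost(users, **kwargs))


print(single_machine_total_cost(0))       # initial spike: hardware only
print(marginal_cost(5_000))               # well inside capacity
print(marginal_cost(10_000))              # the user that exceeds capacity
```

In this model the marginal cost is zero almost everywhere and very large at the capacity boundary, which is the spike the section describes.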

Serverless System Example

Think of serverless services such as AWS S3 or AWS Lambda. The cost of adding extra work in these services is nearly constant because you pay per unit.

Scalability in serverless systems works both up (there are no spikes when the load exceeds the available capacity) and down (there is no initial spike). The downside is that the cost of running the system is not near zero, so you have to think about unit economics.
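As a rough illustration of unit economics, the sketch below models pay-per-unit pricing and computes the volume at which it would cost as much as a fixed up-front purchase. The price per request and the fixed cost are hypothetical values chosen for easy arithmetic, not actual AWS prices, and the function names are assumptions.

```python
def serverless_total_cost(requests, price_per_request):
    """Total cost under a simple pay-per-unit model (no free tier, no tiers)."""
    return requests * price_per_request


def break_even_requests(fixed_cost, price_per_request):
    """Request volume at which pay-per-unit spending equals `fixed_cost`.

    Below this volume the pay-per-unit model is cheaper in this simplified
    comparison; above it, the fixed-cost setup is cheaper as long as it can
    still handle the load.
    """
    return fixed_cost / price_per_request


price = 0.5          # hypothetical price per request
fixed = 1000.0       # hypothetical up-front hardware cost

print(serverless_total_cost(0, price))        # no initial spike
print(serverless_total_cost(1_000, price))    # grows linearly with usage
print(break_even_requests(fixed, price))      # volume where both cost the same
```

The marginal cost here is always `price`, never zero and never a spike, which is the trade-off described above.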